An Introduction to SHAP Values and Machine Learning Interpretability

9.6 SHAP (SHapley Additive exPlanations)

Shapley Value For Interpretable Machine Learning

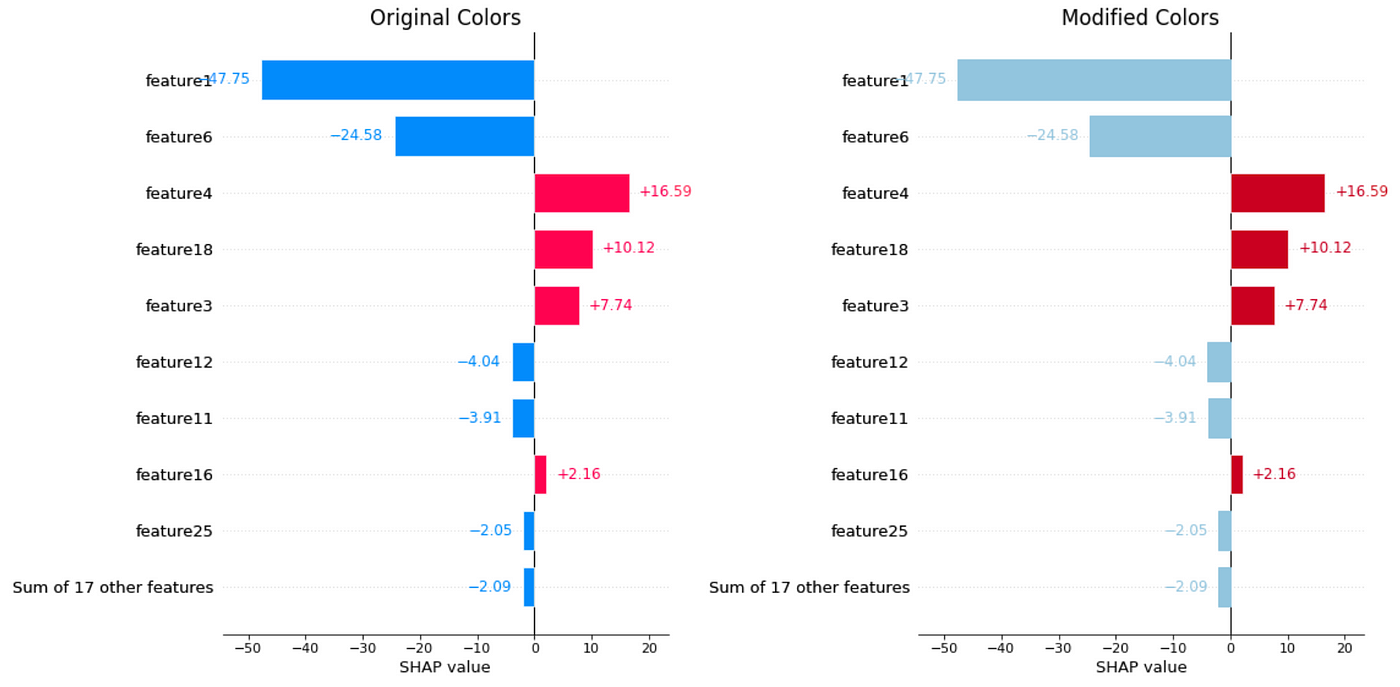

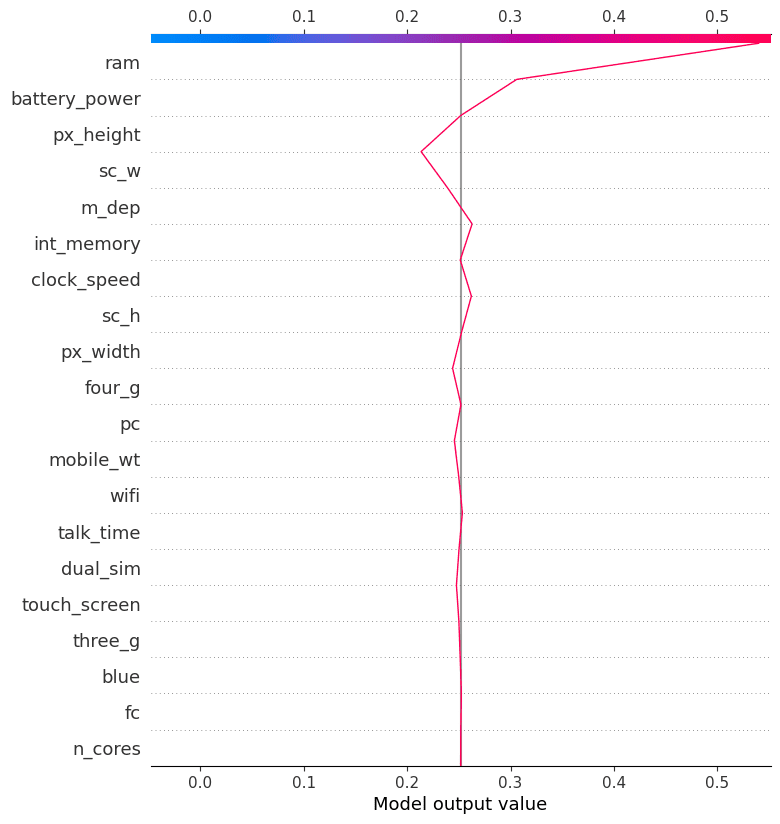

A Comprehensive Guide into SHAP Values

A gentle introduction to SHAP values in R

Ultimate ML interpretability bundle: Interpretable Machine Learning + Interpreting Machine Learning Models With SHAP

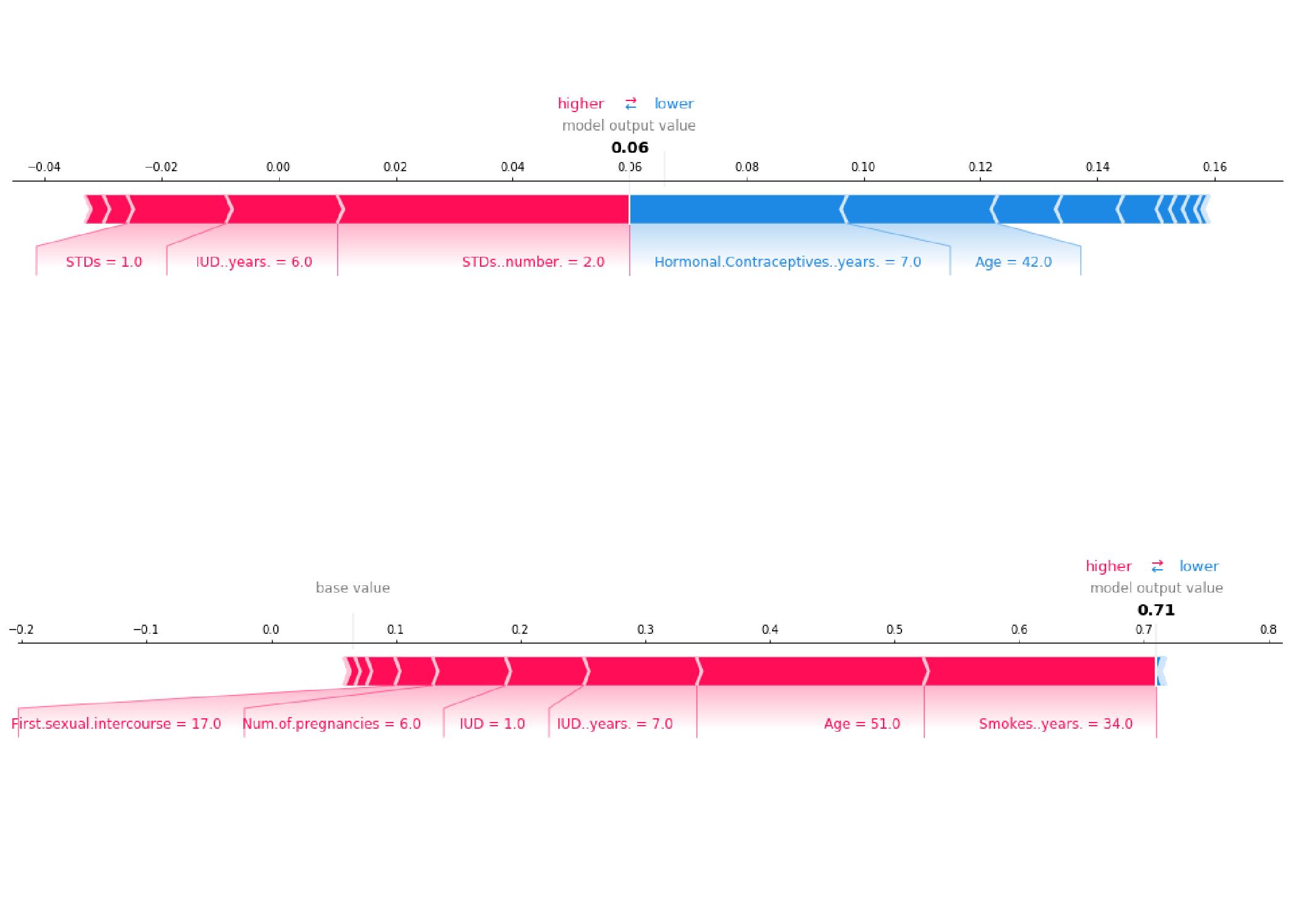

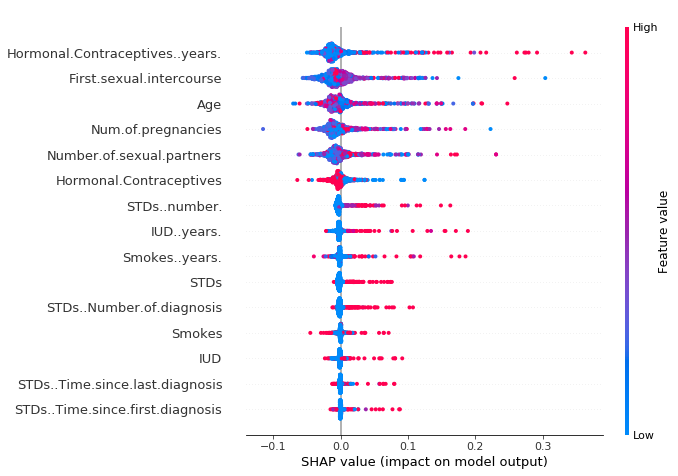

Cells, Free Full-Text

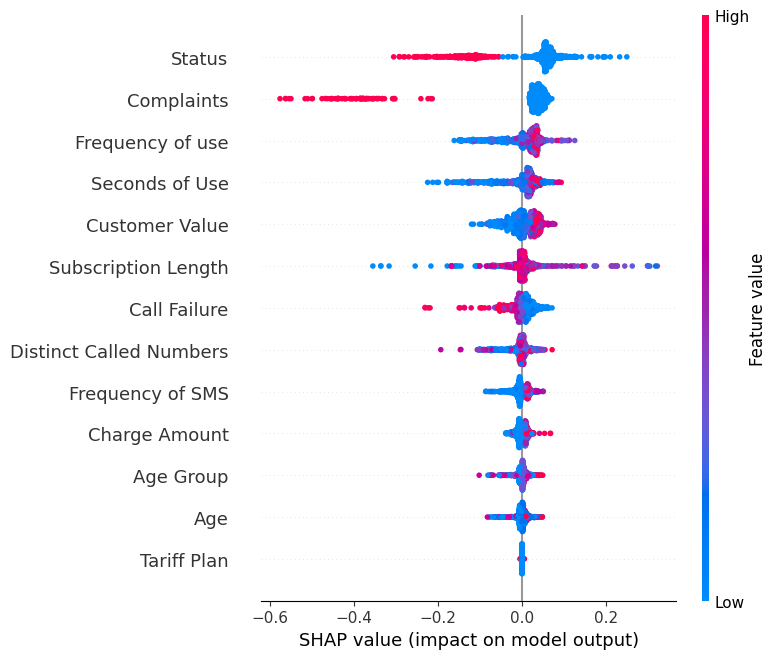

Explaining Machine Learning Models: A Non-Technical Guide to Interpreting SHAP Analyses

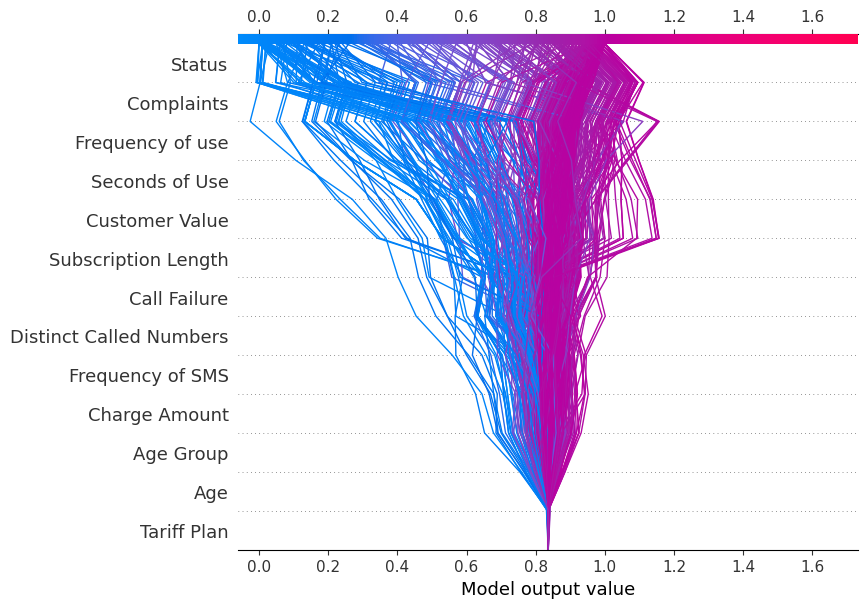

Explainable AI: Thinking Like a Machine, by Joseph George Lewis, March 2024

Using SHAP Values for Model Interpretability in Machine Learning - KDnuggets

Explainable AI explained!

Explainable Machine Learning, Game Theory, and Shapley Values: A technical review